Element AI vs Custom AI Models: Which Scales Better for SMB Automation?

9/9/2025 · 6 min read

Choosing between element ai and custom AI models can feel like a trade-off between speed and control. This guide breaks down how each approach scales for SMB automation, from cost and complexity to accuracy and governance. You’ll leave with a simple decision framework and real use cases you can deploy now.

Element AI vs Custom AI: What SMBs Really Get

When SMB leaders say “element ai,” they usually mean off-the-shelf AI platforms: the Element AI technology that ServiceNow acquired, plus services such as OpenAI, Azure Cognitive Services (now Azure AI services), and Google Vertex AI. These platforms package machine learning into ready-to-use services across NLP, computer vision, and speech, with no heavy data science or software development required. For busy teams, that translates to hours or days to launch, managed cloud infrastructure, and built-in security that supports digital transformation without major hiring. The trade-offs: per-use fees, rate limits, and less control over algorithms, data management, and model behavior.

Custom AI models take a different path. You fine-tune large language models, build task-specific classifiers, or assemble domain-trained pipelines tailored to your data analytics and operations. This approach demands labeled data, MLOps rigor, and deep familiarity with AI algorithms, modeling, and data processing. While it takes weeks or months to deploy, SMBs gain domain accuracy, IP ownership, and cost control at scale—especially when event volume grows and performance and compliance matter. In short, element ai is fastest to value for general automation, while custom models win when precision, privacy, and long-term unit economics are critical.

“element ai”: Off‑the‑shelf platform AI

Off-the-shelf platforms ship prebuilt NLP, computer vision, and speech recognition that slot into your stack via APIs, automation tools, and analytics platforms. Example: a DTC brand connects a help-desk chatbot in a day to deflect FAQs, using natural language processing for intent, machine vision for image recognition of products, and business intelligence dashboards for data analysis of ticket trends.

“Custom AI models”: Fine‑tuned and domain‑trained

Custom stacks combine transfer learning, feature engineering, and ensemble learning to address domain drift and nuanced decision making. Example: a finance ops team trains a predictive analytics model for anomaly detection in invoices, tunes cost-sensitive decision thresholds to reduce false positives while tracking ROC-AUC as the evaluation metric, then deploys it on their secure cloud to meet compliance and data governance needs.

Cost, Scalability, and Implementation Complexity

Cost profiles diverge early. Off-the-shelf element ai typically uses pay-per-use/API pricing that’s favorable up to modest volumes (e.g., around 10k events/month). You avoid big upfront costs, and managed infrastructure handles scaling, burst traffic, and reliability. For text analysis, token usage fees can be predictable, while image and speech workloads hinge more on media length and image recognition depth. However, as volumes approach 100k–1M+ events/month, subscription tiers, rate limits, and per-call fees can erode margins. That’s when custom models—and batch processing strategies—start paying off.

Custom AI requires upfront investment—data labeling, model training, evaluation pipelines, and deployment. Teams need MLOps maturity: monitoring for data drift and concept drift, retraining cadence, and runbooks. The payoff is lower marginal cost at scale via amortized compute, cloud compute reservations, and automation systems tuned to your workload. Done right, advanced analytics and computational intelligence let you control latency SLOs and unit costs while keeping data in your environment.

Cost breakpoints: Per‑use vs amortized compute

Below ~10k events/month, per-use pricing is often cheaper due to zero setup and minimal DevOps. Around 100k+ events/month, custom pipelines with caching and batch inference can cut cost per action by 30–70%. Example: an outreach workflow swaps generic generative calls for a retrieval‑augmented generation step plus a light custom classifier, reducing token spend by 50% while maintaining personalization.
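
To make the breakpoint concrete, here is a minimal Python sketch of the per-use vs amortized comparison. The function names and every price in it are hypothetical placeholders, not vendor quotes; plug in your own per-event price, monthly fixed cost (amortized build plus hosting), and marginal compute cost.

```python
import math
from typing import Optional


def per_use_cost(events: int, price_per_event: float) -> float:
    """Monthly cost on a pay-per-use platform."""
    return events * price_per_event


def custom_cost(events: int, fixed_monthly: float, marginal_per_event: float) -> float:
    """Monthly cost of a custom stack: amortized fixed cost plus per-event compute."""
    return fixed_monthly + events * marginal_per_event


def break_even_events(
    price_per_event: float, fixed_monthly: float, marginal_per_event: float
) -> Optional[int]:
    """Smallest monthly volume at which custom costs no more than per-use.

    Returns None when custom never catches up (its marginal cost is not lower).
    """
    saving = price_per_event - marginal_per_event
    if saving <= 0:
        return None
    return math.ceil(fixed_monthly / saving)


if __name__ == "__main__":
    # Hypothetical inputs, for illustration only.
    price, fixed, marginal = 0.02, 1500.0, 0.005
    for volume in (10_000, 100_000, 1_000_000):
        print(volume, per_use_cost(volume, price), custom_cost(volume, fixed, marginal))
    print("break-even volume:", break_even_events(price, fixed, marginal))
```

The result is only as good as the fixed-cost estimate, so include labeling, retraining, and on-call time in `fixed_monthly` rather than hosting alone.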

Integration depth and MLOps overhead

element ai integrates quickly with HubSpot, Shopify, Slack, Zapier/Make, and CRMs using webhooks, making it ideal for teams with limited engineering resources. Custom stacks tie into data lakes and feature stores and demand evaluation metrics (precision/recall, ROC‑AUC) and go/no‑go pilots. Example: a 30‑60‑90 day plan that starts with a shadow test, promotes to canary, then scales after hitting accuracy and cost-per-ticket targets.
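
One way to keep a shadow, canary, then full rollout honest is to encode the go/no-go rule before the pilot starts. The sketch below is an assumption about how such a gate could look; the stage names, the `next_stage` helper, and the target values are hypothetical, and precision stands in for whichever accuracy metric you choose.

```python
STAGES = ("shadow", "canary", "full")


def next_stage(
    stage: str,
    precision: float,
    cost_per_ticket: float,
    min_precision: float,
    max_cost_per_ticket: float,
) -> str:
    """Promote one stage only when both the accuracy and cost targets are met."""
    if stage not in STAGES:
        raise ValueError(f"unknown stage: {stage!r}")
    passed = precision >= min_precision and cost_per_ticket <= max_cost_per_ticket
    if not passed or stage == STAGES[-1]:
        # Stay put: either a target was missed or there is nowhere left to go.
        return stage
    return STAGES[STAGES.index(stage) + 1]


if __name__ == "__main__":
    # Hypothetical targets: precision of at least 0.85, at most $1.50 per ticket.
    print(next_stage("shadow", 0.90, 1.20, 0.85, 1.50))
    print(next_stage("canary", 0.80, 1.20, 0.85, 1.50))
```

A gate like this never demotes a stage; a rollback rule for a canary that regresses would be a separate decision.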

Performance, Accuracy, and Governance

Prebuilt models excel at general tasks: SEO drafting with prompt libraries, baseline email outreach, voice memo transcription, and basic ticket routing. But specialized or regulated domains (healthcare, finance) often need domain-trained models to capture nuances, manage bias, and meet audit requirements. Custom stacks leverage deep learning, neural networks, and ensemble strategies to push precision and recall higher where error costs are real. They also support explainability, interpretable decision audits, and subgroup fairness testing.

Governance and portability matter as you scale. Vendor lock-in from per-use platforms can slow innovation or inflate costs. Custom models allow portability patterns like ONNX exports, containerization, and API abstraction layers, useful when switching clouds or avoiding a single analytics platform dependency. With stronger control over data minimization, PII handling, and audit trails, custom deployments align better with SOC 2 and ISO 27001 expectations, especially when business automation touches finance ops or HR workflows.

Where custom wins on accuracy

Tasks like predictive lead scoring, nuanced intent classification, fraud detection, and cold‑start recommendation systems benefit from domain features and continual learning. Example: a B2B team tunes thresholds to lift precision from 0.72 to 0.86 on high‑value leads, improving SDR productivity and lowering cost per meeting by 25%.
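
Threshold tuning of this kind can be checked with a few lines of Python. The sketch below computes precision and recall at a given score cutoff; the scores and labels are made-up toy data, not results from the example above, and `precision_recall` is a hypothetical helper name.

```python
from typing import Sequence, Tuple


def precision_recall(
    scores: Sequence[float], labels: Sequence[int], threshold: float
) -> Tuple[float, float]:
    """Precision and recall when every score >= threshold is predicted positive."""
    tp = fp = fn = 0
    for score, label in zip(scores, labels):
        predicted = score >= threshold
        if predicted and label:
            tp += 1
        elif predicted and not label:
            fp += 1
        elif not predicted and label:
            fn += 1
    # Convention used here: report 0.0 when a ratio is undefined.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


if __name__ == "__main__":
    # Toy lead scores and outcomes (1 = converted), for illustration only.
    scores = [0.9, 0.8, 0.7, 0.6, 0.4, 0.3]
    labels = [1, 1, 0, 1, 0, 1]
    for threshold in (0.5, 0.75):
        print(threshold, precision_recall(scores, labels, threshold))
```

On this toy data, raising the cutoff from 0.5 to 0.75 lifts precision from 0.75 to 1.0 while recall drops from 0.75 to 0.5, which is the trade-off to weigh before committing to a threshold.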

Governance, ethics, and portability

Custom models enable Explainable AI, bias testing across subgroups, and granular audit logs—critical for regulated sectors. Example: an internal model card documents training data, known limitations, and monitoring SLOs; the model is packaged in containers with an ONNX fallback to keep portability despite cloud or API changes.

Decision Framework + Use Cases for SMB Automation

Use this simple filter: If you need speed, low lift, and general-purpose workflows across NLP, computer vision, and speech, element ai scales better. If you need domain accuracy, higher volumes, and control over cost, data, and integrations, custom models scale better. Many SMBs succeed with a hybrid—off-the-shelf LLMs for text generation plus custom classifiers and rules for decision making, caching, and guardrails. This design balances AI technology flexibility and cost while keeping sensitive workloads in your environment.

Across marketing and ops, a hybrid approach lets you mix AI applications with automation solutions that match ROI to complexity. Combine automation software, AI algorithms, and pattern recognition with practical guardrails: confidence thresholds, fallbacks, and human‑in‑the‑loop QA. You’ll still get the benefits of machine intelligence and business intelligence without taking on unnecessary risk or engineering overhead.
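
As a rough illustration of those guardrails, the Python sketch below routes a classifier's output by confidence: automate when confident, send to human review in the middle band, and fall back otherwise. The `Decision` type, the `route` function, and both threshold values are hypothetical; tune the bands against your own precision and cost targets.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Decision:
    route: str        # "auto", "human_review", or "fallback"
    label: str        # the label the classifier proposed
    confidence: float


def route(
    label: str,
    confidence: float,
    auto_threshold: float = 0.85,    # assumed value, not a recommendation
    review_threshold: float = 0.50,  # assumed value, not a recommendation
) -> Decision:
    """Pick a handling path from the classifier's confidence."""
    if not 0.0 <= review_threshold <= auto_threshold <= 1.0:
        raise ValueError("need 0 <= review_threshold <= auto_threshold <= 1")
    if confidence >= auto_threshold:
        return Decision("auto", label, confidence)
    if confidence >= review_threshold:
        return Decision("human_review", label, confidence)
    # Low confidence: do not act on the label, use a safe default path.
    return Decision("fallback", label, confidence)


if __name__ == "__main__":
    for conf in (0.92, 0.60, 0.20):
        print(route("refund_request", conf))
```

Logging every `Decision` also gives you the data needed to revisit the thresholds later.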

Quick scorecard to choose your path

We recommend scoring four areas from 1 to 5 and adding them up: volume/variance, compliance risk, integration depth, and latency/cost SLOs. A low total points to element ai; a high total points to custom. Example: a support team scoring “3, 2, 2, 2” (total 9) starts with element ai; a finance team scoring “5, 5, 4, 4” (total 18) builds custom.

Volume and variance: Higher and spikier data benefits from custom pipelines and batch inference to control unit cost.

Compliance and privacy: Sensitive data argues for private deployment, data minimization, and explainability tooling.

Integration depth: Complex CRMs, data lakes, and IoT streams favor custom modeling and API abstraction layers.

Latency and cost SLOs: Tight SLOs and big data workloads need tuned architectures and advanced analytics.
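
The scorecard can be sketched in a few lines of Python. The article does not state a cutoff, so the value of 12 below (the midpoint of the possible 4 to 20 range) is an assumption, as are the `AREAS` keys and the `recommend` function name.

```python
from typing import Dict, Tuple

AREAS = ("volume_variance", "compliance_risk", "integration_depth", "latency_cost_slos")


def recommend(scores: Dict[str, int], cutoff: int = 12) -> Tuple[int, str]:
    """Sum the four 1-5 scores; totals above the (assumed) cutoff favor custom."""
    missing = [area for area in AREAS if area not in scores]
    if missing:
        raise ValueError(f"missing scores for: {missing}")
    for area in AREAS:
        if not 1 <= scores[area] <= 5:
            raise ValueError(f"{area} must be between 1 and 5")
    total = sum(scores[area] for area in AREAS)
    path = "custom" if total > cutoff else "element ai"
    return total, path


if __name__ == "__main__":
    support = dict(zip(AREAS, (3, 2, 2, 2)))
    finance = dict(zip(AREAS, (5, 5, 4, 4)))
    print(recommend(support))
    print(recommend(finance))
```

With the two example teams, the support scores total 9 and land on element ai, while the finance scores total 18 and land on custom. Totals near the cutoff are a reasonable signal to start hybrid.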

High‑impact SMB use cases

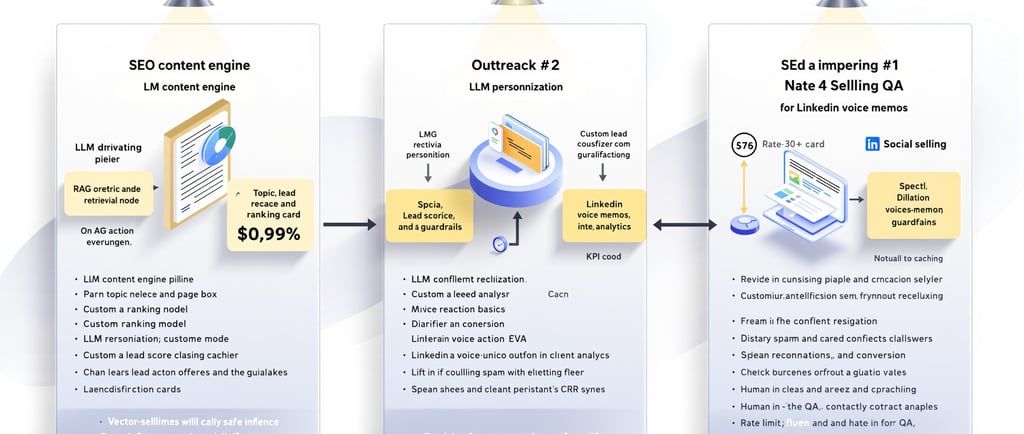

SEO content engine: Start with an off‑the‑shelf LLM and editorial guardrails; layer in RAG and a custom ranking model once you hit scale. Example: our 24/7 AI SEO Blog System pairs prompt libraries with retrieval to improve topical relevance and on‑page data analysis for ranking.

Outreach: Combine an LLM with a custom lead score to reduce spam and lift conversions; tools like our Instagram DM Assistant automate personalized DMs at volume while honoring rate limits.

Social selling: Speech recognition plus text analytics powers LinkedIn voice memos; our Personalized Voice Memo Assistant for LinkedIn handles transcript quality, diarization basics, and CRM sync to streamline follow‑up.

Practical CTA: If you want a fast start with measurable outcomes, deploy one proven automation this week—such as our 24/7 AI SEO Blog System—and baseline your cost per lead and ranking lift. You can add custom classifiers later as volume grows.

Bottom line: element ai scales best when you need speed-to-launch, lower upfront costs, and general-purpose automation; custom AI models scale best when you require domain accuracy, higher event volumes, and tighter control of data, unit costs, and compliance. Start hybrid: measure precision/recall and cost per action for one workflow, then set a threshold—like 100k events/month or a target CPA—at which you’ll expand your custom stack.