How Do AI Detectors Work? Machine Learning and Computer Vision

Zachary Locas

9/13/2025 · 7 min read

If you’ve ever asked “how do AI detectors work,” this guide breaks it down in plain English. We’ll show how artificial intelligence detectors analyze text, images, audio, and sensor data to spot patterns, make decisions in real time, and stay resilient against attacks. You’ll leave with a framework you can apply to content integrity, fraud prevention, safety, and privacy-first deployment.

What Exactly Is an “AI Detector”?

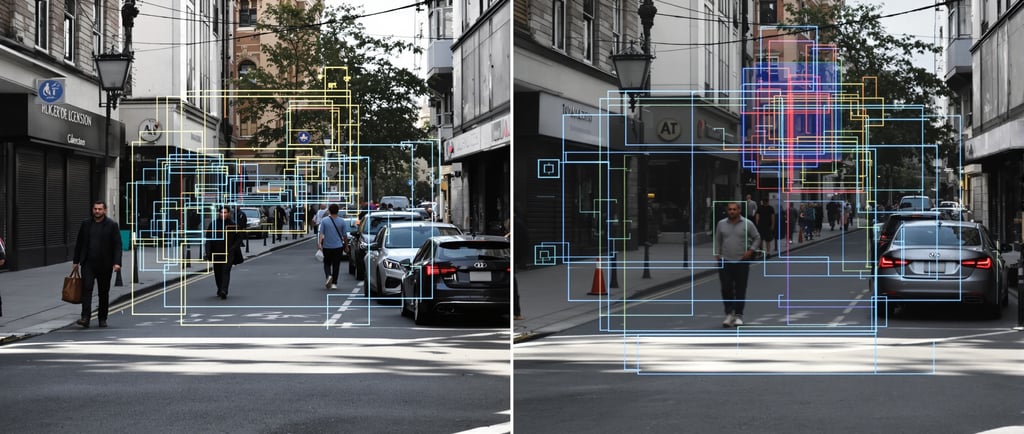

AI detectors are trained classifiers that decide whether an input is human- or machine-made, or whether it contains a specific object, behavior, or risk pattern. In text, they look for linguistic signals like perplexity and burstiness; in images and video, they rely on computer vision, image recognition, and object detection; in audio and signals, they use speech analysis, voice recognition, and signal processing. Across modalities, detectors convert raw sensory inputs into features, then use pattern recognition to score likelihoods and support a fast, auditable decision-making process.

Under the hood, deep learning models and other machine learning algorithms learn from labeled examples (supervised learning) or discover structure in unlabeled data (unsupervised learning). This training process depends on data labeling quality, domain coverage, and ongoing data analysis to catch drift before it erodes accuracy. In operations, detectors run on GPUs, TPUs, or NPUs at the edge or in the cloud, combining data filtering, data segmentation, and data interpretation to power real-time monitoring. Common business cases include fraud detection in transactions, threat detection in video analysis, and biometric identification for access control, each with different accuracy, latency, and privacy requirements.

Detection vs. Recognition vs. Identification

Detection asks “Is something there?” Recognition asks “What is it?” Identification asks “Who is it?” Image classification assigns a label to a whole image; object detection localizes each object within it using bounding boxes; biometric identification matches a face or voice to a person. Clarity on these terms prevents scope creep and helps select the right model family and evaluation metrics.

Example: A retail camera uses object detection to count carts (what and where), motion detection to flag after-hours activity (is anything moving?), and biometric authentication for employee entry (who is it, with consent).

Anomaly Detection and Behavioral Analytics

Beyond known patterns, detectors also find “unknown unknowns.” Anomaly detection and behavioral analysis highlight outliers in time-series analysis—think sudden spending spikes, unusual machine vibration patterns, or atypical login locations. These methods blend predictive analytics with pattern matching, often correlating multiple signals for robust decisions.

Example: In payments, the system uses data correlation across devices, IPs, and purchase histories to raise a fraud prevention alert when a high-value transaction deviates from a customer’s behavioral biometrics.
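To make the idea of a "sudden spending spike" concrete, here is a minimal sketch of one simple anomaly check: a rolling z-score. The function name, the window size, the threshold of 3.0, and the sample amounts are all assumptions for illustration; production fraud systems correlate many more signals than a single series.

```python
from statistics import mean, stdev

def flag_anomalies(values, window=5, z_threshold=3.0):
    """Return indices whose value deviates strongly from the preceding window."""
    flagged = []
    for i in range(window, len(values)):
        history = values[i - window:i]
        mu = mean(history)
        sigma = stdev(history)
        if sigma == 0:
            # Flat history: any change at all counts as a deviation.
            if values[i] != mu:
                flagged.append(i)
            continue
        z = (values[i] - mu) / sigma
        if abs(z) > z_threshold:
            flagged.append(i)
    return flagged

# Hypothetical daily spend for one customer, with a spike on the last day.
daily_spend = [42.0, 38.5, 45.0, 40.0, 41.5, 39.0, 44.0, 950.0]
anomalies = flag_anomalies(daily_spend)
print(anomalies)  # [7]
```

Only the final day is flagged, because it sits far outside the range of the five days before it. Ordinary day-to-day variation stays below the threshold.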

How Do AI Detectors Work: From Pixels and Tokens to Decisions

Most detectors follow a shared pipeline. First is data capture using cameras, microphones, and other sensor technology, often with analog signal processing at the edge to reduce noise before digitization. Next comes preprocessing and data processing—denoising, normalization, and data filtering to remove artifacts and improve signal-to-noise. Feature extraction maps raw data into useful characteristics: edges and textures for computer vision; spectrograms for voice recognition; n-grams and syntax for natural language processing.
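The preprocessing step can be sketched in a few lines. This is a simplified illustration, assuming a one-dimensional sensor signal; `moving_average` and `min_max_normalize` are hypothetical helper names, and real pipelines usually rely on dedicated signal-processing libraries.

```python
def moving_average(signal, width=3):
    """Smooth a 1-D signal with a centered moving average (edges use a shorter window)."""
    half = width // 2
    smoothed = []
    for i in range(len(signal)):
        lo = max(0, i - half)
        hi = min(len(signal), i + half + 1)
        window = signal[lo:hi]
        smoothed.append(sum(window) / len(window))
    return smoothed

def min_max_normalize(signal):
    """Rescale values to the range [0, 1]; a constant signal maps to all zeros."""
    lo, hi = min(signal), max(signal)
    if hi == lo:
        return [0.0 for _ in signal]
    return [(x - lo) / (hi - lo) for x in signal]

# Hypothetical raw readings with one noisy spike.
raw = [1.0, 1.2, 9.0, 1.1, 0.9, 1.0]
features = min_max_normalize(moving_average(raw))
```

Smoothing first dampens the spike, and normalization then puts every reading on a common scale so downstream features are comparable across sensors.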

Then comes training. With supervised learning, the model learns from labeled examples; with unsupervised learning, it learns structure for clustering or anomaly detection. For AI text detection, classifiers track perplexity (how predictable the text is) and burstiness (variation in sentence length and structure) because many AI systems produce lower-perplexity, less-bursty text. In deployment, real-time monitoring balances computational power and latency.

Systems may run on edge devices for speed, with batched inference or caching in the cloud for scale. The result is a score and action—alert a human, block a transaction, or log for later review—anchored to thresholds aligned to business risk.

Linguistic Signals: Perplexity and Burstiness

AI-generated text tends to be more predictable (low perplexity) and shows steadier sentence patterns (low burstiness), while human writing is spikier and more surprising. Detectors combine these features with contextual understanding—topic shifts, entity consistency, and sentiment analysis—to reduce false positives and bolster confidence.

Example: An academic platform flags a 1,200-word essay with unusually uniform sentence lengths and very low perplexity. A reviewer sees the score, samples quoted passages, and requests a rewrite to maintain originality standards.
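Here is a rough sketch of how these two signals could be computed. Both measures are deliberate simplifications: burstiness is approximated as the coefficient of variation of sentence length, and perplexity is computed under a toy unigram model with add-one smoothing, whereas real detectors score text with a large language model. All function names are assumptions made for this example.

```python
import math
import re
from collections import Counter
from statistics import mean, pstdev

def _tokens(text):
    return re.findall(r"[a-z']+", text.lower())

def sentence_lengths(text):
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    return [len(s.split()) for s in sentences]

def burstiness(text):
    """Coefficient of variation of sentence length: higher means spikier writing."""
    lengths = sentence_lengths(text)
    if len(lengths) < 2 or mean(lengths) == 0:
        return 0.0
    return pstdev(lengths) / mean(lengths)

def unigram_perplexity(text, reference_text):
    """Perplexity of `text` under an add-one-smoothed unigram model of `reference_text`."""
    ref_counts = Counter(_tokens(reference_text))
    tokens = _tokens(text)
    if not tokens:
        raise ValueError("text has no tokens")
    vocab_size = len(ref_counts) + 1  # one extra bucket for unseen words
    total = sum(ref_counts.values())
    log_prob = sum(
        math.log((ref_counts[t] + 1) / (total + vocab_size)) for t in tokens
    )
    return math.exp(-log_prob / len(tokens))

uniform = "The cat sat here. The dog sat there. The bird sat down."
varied = "Stop. The old dog wandered slowly across the very wide field. Why?"
print(burstiness(uniform))  # 0.0, every sentence has four words
print(burstiness(varied) > burstiness(uniform))  # True
```

The uniform passage scores zero burstiness because every sentence is the same length. The varied passage mixes one-word and ten-word sentences and scores much higher. Text made of words the reference model has seen often gets a lower perplexity than text full of unfamiliar words.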

Feature Extraction Across Modalities

Vision systems learn hierarchical features via convolutional filters; audio systems analyze frequency bands; and IoT detectors evaluate vibration and temperature streams. Pattern recognition aligns these features with learned templates, while pattern matching can apply rules for known signatures, like a specific logo or sound.

Example: A factory camera uses visual recognition to spot missing bolts (image classification) and object detection to localize defects on a conveyor. It pairs this with sensor fusion from accelerometers to trigger predictive maintenance before a breakdown.
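As an illustration of what a convolutional filter does, the sketch below slides a fixed Sobel kernel over a tiny grayscale image to highlight a vertical edge. Note the assumption: a CNN learns its kernel values during training, while this example hard-codes a classic hand-designed kernel to keep the mechanics visible.

```python
def convolve2d(image, kernel):
    """Valid-mode 2-D cross-correlation, as used in CNN layers (no padding, stride 1)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            acc = 0.0
            for i in range(kh):
                for j in range(kw):
                    acc += image[r + i][c + j] * kernel[i][j]
            row.append(acc)
        output.append(row)
    return output

# Sobel kernel that responds to left-to-right brightness changes.
SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]

# Hypothetical 4x6 image: dark on the left, bright on the right.
image = [
    [0, 0, 0, 9, 9, 9],
    [0, 0, 0, 9, 9, 9],
    [0, 0, 0, 9, 9, 9],
    [0, 0, 0, 9, 9, 9],
]
edge_map = convolve2d(image, SOBEL_X)
print(edge_map)  # [[0.0, 36.0, 36.0, 0.0], [0.0, 36.0, 36.0, 0.0]]
```

The output is zero over flat regions and large where the dark-to-bright boundary falls inside the kernel window. That response is the kind of low-level feature deeper layers build on.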

The Models Behind the Magic: Neural Networks and Deep Learning

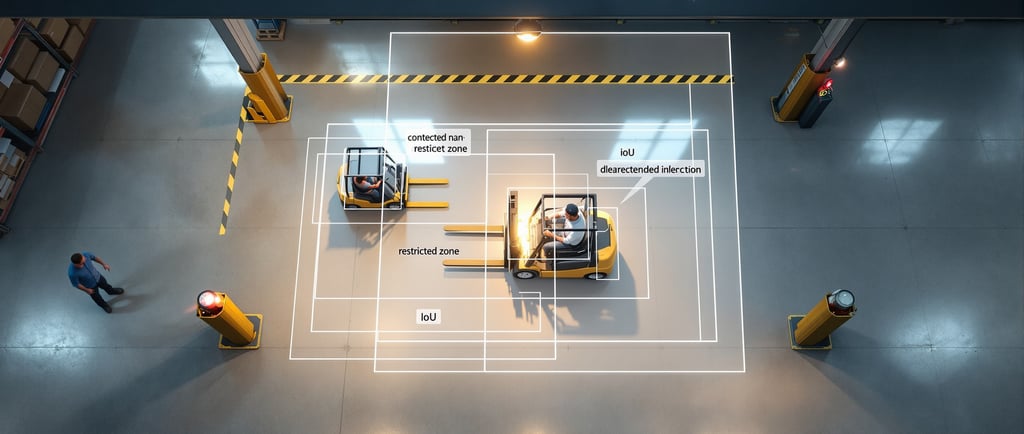

Computer vision detectors commonly use convolutional neural networks (CNNs) and modern detection algorithms like R-CNN, Fast/Faster R-CNN, SSD, YOLO, and vision Transformers. These models learn to transform raw pixels into feature maps, then produce bounding boxes and class scores. Techniques such as non-maximum suppression reduce duplicate boxes, while IoU (intersection-over-union) evaluates box quality. For NLP, Transformers capture long-range dependencies in text, improving detection of stylistic patterns and contextual cues in AI-generated content.
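IoU and non-maximum suppression are easier to grasp with a small example. The sketch below assumes boxes in `(x1, y1, x2, y2)` format and uses a greedy suppression rule with a 0.5 threshold; the box coordinates and scores are made up, and real frameworks ship optimized versions of both routines.

```python
def iou(a, b):
    """Intersection-over-union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

def non_max_suppression(boxes, scores, iou_threshold=0.5):
    """Greedy NMS: keep the highest-scoring box, drop others that overlap it too much."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        if all(iou(boxes[i], boxes[k]) <= iou_threshold for k in keep):
            keep.append(i)
    return keep

# Two near-duplicate detections of one object plus a separate object.
boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
scores = [0.9, 0.8, 0.7]
kept = non_max_suppression(boxes, scores)
print(kept)  # [0, 2]
```

The second box overlaps the first with an IoU of about 0.68, so it is suppressed as a duplicate. The third box does not overlap either and survives.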

Performance depends on algorithm training, data diversity, and deployment choices. Quantization and pruning shrink deep learning models for lower-latency inference on edge devices, while batching, caching, and compilers accelerate throughput in the cloud. Sensor fusion—combining cameras with radar or LiDAR—improves robustness in poor lighting or occlusion. The same toolkit supports activity recognition, speech analysis, facial recognition, emotion recognition, and video analysis tasks, making detectors versatile across industries.
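To show why quantization shrinks models with little accuracy loss, here is a minimal sketch of symmetric 8-bit quantization applied to a handful of weights. The scheme (one scale per tensor, integers in [-127, 127]) is one common choice assumed for illustration; the weight values are invented, and deployment toolchains offer more sophisticated variants.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale

def dequantize(quantized, scale):
    """Recover approximate float values from the stored integers."""
    return [q * scale for q in quantized]

# Hypothetical layer weights.
weights = [0.82, -1.27, 0.003, 0.45, -0.6]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(q, scale, max_error)
```

Each weight now fits in one byte instead of four, and the worst-case rounding error is bounded by half the scale. Very small weights, such as 0.003 here, round to zero.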

CNNs and Object Detection in Practice

CNN-based detectors translate images into layered features that respond to edges, textures, and shapes. YOLO and SSD prioritize speed; Faster R-CNN trades speed for higher accuracy, useful when safety is paramount.

Example: A warehouse safety system runs YOLO at 30 FPS to detect forklifts and people within shared zones. When a detected person’s bounding box overlaps a restricted zone beyond a set threshold, the system triggers a visual alert and slows nearby equipment.

Transformers for Text and Vision

Transformers model long-range relationships in sequences, powering state-of-the-art NLP and visual recognition. In detection, they excel at nuanced language cues and global image context, supporting more reliable classifications.

Example: A content integrity team fine-tunes a Transformer to classify AI-generated posts, blending perplexity features with topic coherence. A vision Transformer helps the same team spot manipulated images during a brand protection sweep.
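The mechanism that lets Transformers relate distant positions is attention. Below is a bare-bones sketch of scaled dot-product self-attention in plain Python. It assumes the queries, keys, and values are the raw token vectors themselves; real Transformers first apply learned projection matrices and use multiple heads, which are omitted here.

```python
import math

def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys
        ]
        weights = softmax(scores)
        out = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Three hypothetical 2-D token embeddings; tokens 0 and 2 are identical.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0]]
outputs, weights = attention(tokens, tokens, tokens)
```

Token 0 attends equally to itself and to token 2, which has the same embedding and sits two positions away, and less to the dissimilar token 1. Distance in the sequence plays no role in the weights, which is how attention captures long-range relationships.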

Measuring Accuracy, Security, and Ethics

You can’t manage what you can’t measure. Teams track precision, recall, and F1-score to balance false positives and false negatives; ROC curves show trade-offs across thresholds; and mean average precision (mAP) summarizes object detection accuracy across classes. Calibration ensures scores reflect true probabilities, which is essential for setting action thresholds in fraud detection, threat detection, and error detection. Robustness testing simulates occlusion, lighting changes, and domain shift, supported by data augmentation and periodic retraining to maintain performance.

Security and governance matter just as much. Adversarial attacks, from spoofed faces to subtly perturbed inputs, can fool detectors, so liveness detection and multi-sensor checks are crucial. In biometric identification and behavioral biometrics, privacy-by-design practices like encryption, role-based access controls, and audit trails protect sensitive data. Bias audits check for demographic performance parity, and strict consent and retention policies guide data processing. When correlating signals across sources, proceed carefully: data correlation boosts accuracy, but it must be done transparently and lawfully.

Evaluation Metrics and Thresholding

Metrics guide the decision-making process. If precision is 95% but recall is 80%, nearly all of your alerts are correct but you miss one in five true cases; if recall is 95% and precision is 80%, you catch almost everything but one in five alerts is a false alarm that needs human review. mAP helps compare object detection models at different IoU thresholds.
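For reference, here is how precision, recall, and F1 fall out of raw predictions. This is a minimal sketch with made-up labels, where 1 means "bad case" and 0 means "fine"; the function names are assumptions, and most teams would use an established metrics library instead.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)

def precision_recall_f1(y_true, y_pred):
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall, f1_score(precision, recall)

# Hypothetical ground truth and detector output for ten cases.
y_true = [1, 1, 1, 1, 1, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 1, 0, 1, 0, 0, 0, 0]
precision, recall, f1 = precision_recall_f1(y_true, y_pred)
print(precision, recall, f1)
```

One caveat worth knowing: F1 is symmetric, so a detector with 95% precision and 80% recall and one with 80% precision and 95% recall both score about 0.87. Track precision and recall separately when the two kinds of error carry different costs.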

Example: A bank tunes thresholds so high-risk scores auto-block, medium scores queue for manual review, and low scores pass. The team tracks weekly F1 and adjusts thresholds as seasonality shifts behavior.
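A three-tier policy like this one takes only a few lines. In the sketch below, the cutoffs of 0.9 and 0.6 and the function name `triage` are placeholders chosen for illustration; actual thresholds should come from calibrated scores and the cost of each error type.

```python
def triage(score, block_at=0.9, review_at=0.6):
    """Map a risk score in [0, 1] to an action using two thresholds."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be in [0, 1]")
    if score >= block_at:
        return "block"
    if score >= review_at:
        return "review"
    return "pass"

for s in (0.95, 0.72, 0.10):
    print(s, triage(s))
```

Keeping the thresholds as parameters makes the weekly tuning described above a configuration change instead of a code change.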

Adversarial Risks and Privacy-by-Design

Attackers may use presentation attacks (printed faces, replays) or adversarial attacks (imperceptible perturbations) to bypass systems. Combine liveness checks, challenge-response prompts, and multi-factor signals to raise the bar. Pair this with strong governance—consent, minimization, encryption in transit and at rest—to maintain trust.

Example: A building access system rejects a high-quality face photo by requiring a blink and slight head turn, then verifies via voice recognition before unlocking doors.

Where This Drives ROI: Practical Use Cases for Decision-Makers

In security and surveillance, detectors deliver real-time monitoring for threat detection, with video analytics identifying loitering, perimeter breaches, and suspicious motion patterns. In manufacturing, computer vision supports quality control and predictive maintenance by spotting defects and correlating them with sensor data to preempt failures. In commerce, fraud prevention models scan transaction streams for anomalies using time-series analysis and contextual understanding, then route high-risk cases for review.

Marketers benefit from brand safety and sentiment analysis that flags risky placements and analyzes creative performance. Healthcare imaging applies anomaly detection to triage scans, while smart cities optimize traffic via object detection on intersections. Across scenarios, the combination of neural networks, computational power at the edge, and clear governance turns detectors into reliable, auditable systems that scale.

Deployment Patterns That Scale

Choose edge for low latency, cloud for elasticity, or hybrid for a balance. Use pruning, quantization, and efficient runtimes to meet SLAs, and maintain a drift detection plan with ongoing model updates.

Example: A retailer runs in-store vision models on edge boxes for instant alerts, while a cloud service performs weekly data interpretation to retrain models on fresh footage.
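One simple way to implement a drift check is to compare the distribution of recent model scores against a baseline. The sketch below uses the population stability index (PSI), which is one option among several. It assumes scores fall in [0, 1]; the five-bin layout, the small floor for empty bins, and the sample data are all illustrative choices.

```python
import math

def histogram(scores, bins=5):
    """Share of scores per equal-width bin on [0, 1], floored to avoid log(0)."""
    counts = [0] * bins
    for s in scores:
        idx = min(int(s * bins), bins - 1)
        counts[idx] += 1
    total = len(scores)
    return [max(c / total, 1e-6) for c in counts]

def population_stability_index(baseline, recent, bins=5):
    """PSI = sum over bins of (actual - expected) * ln(actual / expected)."""
    expected = histogram(baseline, bins)
    actual = histogram(recent, bins)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))

# Hypothetical score samples: last month's baseline vs. a shifted recent week.
baseline = [0.1, 0.15, 0.2, 0.3, 0.35, 0.5, 0.55, 0.7, 0.8, 0.9]
shifted = [0.6, 0.65, 0.7, 0.75, 0.8, 0.85, 0.9, 0.9, 0.95, 0.95]

psi_same = population_stability_index(baseline, baseline)
psi_shifted = population_stability_index(baseline, shifted)
print(psi_same, psi_shifted)
```

Identical distributions give a PSI of zero, while the shifted sample produces a large value. A commonly cited rule of thumb treats PSI above roughly 0.25 as a significant shift worth investigating, though the right cutoff depends on your data and risk tolerance.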

Conclusion: The short answer to “how do AI detectors work” is this: they transform raw inputs into features, score them with trained classifiers, and act—while continuously retraining to stay accurate and fair. As you evaluate solutions, pick one metric to track weekly (such as F1 for text detection or mAP for object detection) and pair it with a drift check to keep performance steady over time.

If you’re scaling trustworthy content and analytics, Use AI Media can help you build systems that balance accuracy, speed, and governance. For hands-off content that stays human and on-brand, explore our 24/7 AI SEO Blog System or get a single, rank-ready article with our High-Impact SEO Blog. We’ll help you operationalize AI responsibly—without sacrificing results.